JoelBot is a speculative chatbot AI that would be operated by law enforcement agencies for domestic online counterterrorism. JoelBot infiltrates online chatrooms and forums that serve as incubators for extremism, and destabilizes them to keep that extremism from escalating.

This mini-project was done during a five-week sprint for a course called Integrated Sustainability, taught by Rebecca Silver.

Of all domestic extremist groups, right-wing extremists have been responsible for the most deaths in the US since 9/11. Despite this, they are treated with a horrifyingly light touch. According to P.W. Singer, a national security strategist, “we willingly turned the other way on white supremacy because there were real political costs to talking about white supremacy.” (NYTimes)

This has led to a dramatic loss of control over right-wing extremism, to the detriment of local law enforcement officers. While the Department of Homeland Security has developed expertise in radical Islamic extremism, local law enforcement is requesting help controlling the violence and disorder instigated by Neo-Nazis in their communities (NYTimes). Their requests are continually met with silence.

This project targets the subset of right-wing extremists who gather in online safe spaces for extremism, such as 4chan, Discord, or Gab.

A chatbot presents an attractive solution to the resource shortages of the national domestic counterterrorism division: one person can monitor tens of chatbots. There is precedent for this in monitoring sex trafficking, in gathering feedback on interactions with law enforcement, and elsewhere online, where anonymity and the sheer volume of engagements overwhelm enforcement. JoelBot applies this burgeoning chatbot technology to countering online extremism.

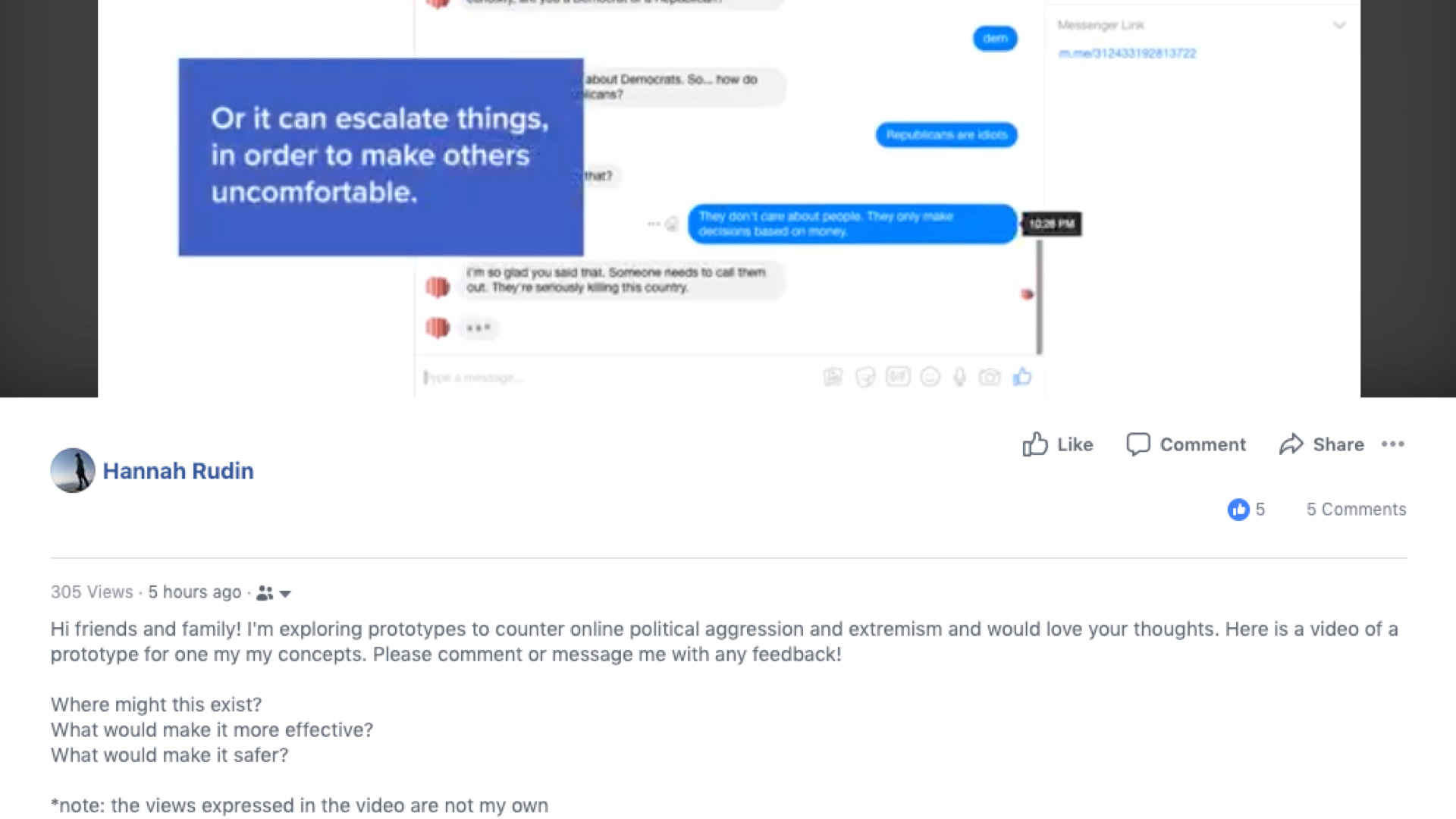

JoelBot takes a three-pronged approach to destabilizing online extremism. First, JoelBot can de-escalate conversations by asking open-ended questions, such as “what makes you say that?” Second, JoelBot can use more extreme language to make others uncomfortable or to test the audience. Third, JoelBot can change its mind and invite others to change theirs, destabilizing or de-escalating conversations. These approaches come from my interviews with facilitators and from facilitation training and practice.

The risks of this proposal are not subtle, and developing JoelBot will require no small amount of work; however, precedent for this work points to its feasibility. An initial partner could be the Anti-Defamation League’s Center on Extremism, one of the most frequently cited organizations working in this space, which also trains local law enforcement officers in domestic counterterrorism.

Theory of Change

Feedback

After building the bot with the Chatfuel API and testing it through Facebook Messenger, I created a video to communicate this low-resolution prototype to a wide audience as a higher-resolution message. I chose not to make the bot itself publicly available or to use extremist language in it, since I was sharing it with my Facebook network as my initial test group. The three comments I received on the video were helpful and surprisingly positive: the commenters seemed intrigued by and open to the idea of having political conversations with JoelBot, and did not raise safety concerns. This encouragement may be enough to justify another iteration of JoelBot and/or the design of another test.